All models are wrong − a computational modeling expert explains how engineers make them useful

- Written by Zachary del Rosario, Assistant Professor of Engineering, Olin College of Engineering

When engineers design things, they use models to predict how the things will work in the natural world. But all models have limitations. MTStock Studio/E+ via Getty Images

Nicknamed “Galloping Gertie” for its tendency to bend and undulate, the Tacoma Narrows Bridge had just opened to traffic on July 1, 1940. In a now infamous failure, in the face of moderate winds the morning of Nov. 7, 1940, the bridge started to repeatedly twist. After an hour of twisting, the bridge collapsed. One fatal engineering assumption led the bridge to shake itself apart.

At the time, many designers believed that wind could not cause bridges to move up and down. That it can may seem obvious now, but the incorrect assumption cost about US$65 million in today’s dollars and a dog’s life.

Small vertical movements allowed the bridge to twist. Near the end, the bridge twisted in ways the designers had never anticipated, and this twisting stressed the structure until it collapsed.

By assuming no vertical movement from wind, the engineers didn’t study how parts of the bridge would flutter in the wind before they built the bridge. This oversight ultimately doomed the bridge.

The Tacoma Narrows Bridge collapsed in 1940 because its designers assumed it wouldn’t flutter up and down in the wind, but it ended up being slender enough that the wind caused it to move up and down. University of Washington Libraries Digital Collections

This failure illustrates an idea that many engineering students learn during their coursework: All engineering calculations are based on models. Safe design requires engineers to recognize the assumptions in their models and to ensure the design’s safety despite any limitations.

I am an expert in computational modeling, which I teach at Olin College. In my classes, I talk about models and teach engineers to use them safely.

Learning to use models carefully is important: As the famous statistician George Box said, “All models are wrong – some are useful.”

Models and their engineering use

Models are interpretive frameworks that help scientists and engineers connect data to the real world. For instance, you likely have an everyday sense for the strength of objects: If you bend a piece of wood with enough force, it will break. A stronger board can take more force.

Engineers have models that make this everyday sense more precise.

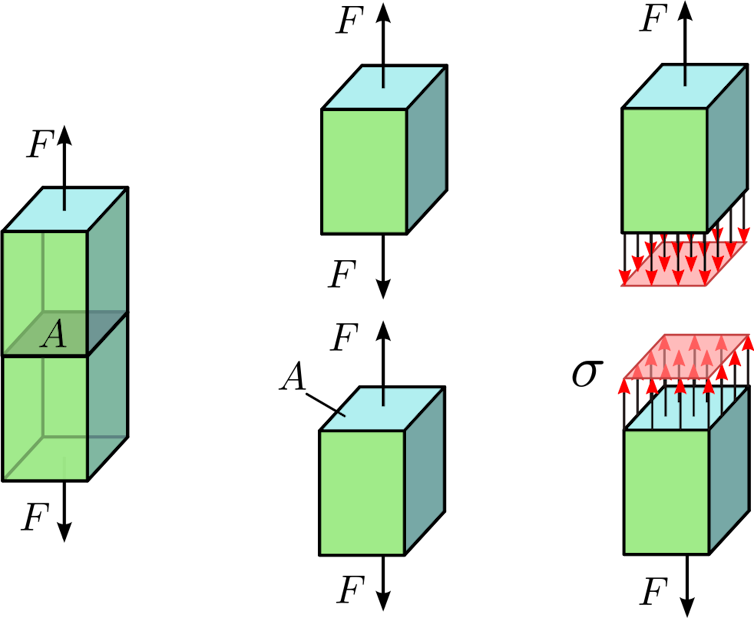

Engineering strength depends on an interpretive framework that relates forces, the size of an object and their ratio − which represents mechanical stress. What engineers call “strength” relates to this computed stress.

Considering strength helps engineers select a material that is strong enough to build a bridge.
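Written out, the framework above is a single ratio. The block below is a minimal sketch, not a design rule: the symbol for the material's strength and the numbers in the worked line are assumptions chosen only for illustration.

```latex
\sigma = \frac{F}{A}, \qquad \text{design is acceptable if } \sigma \le \sigma_{\text{strength}}

% Illustrative numbers (assumed, not from a real design): F = 10 kN, A = 100 mm^2
\sigma = \frac{10\,000\ \text{N}}{100\ \text{mm}^2} = 100\ \text{N/mm}^2 = 100\ \text{MPa}
```

Doubling the area halves the stress, which is why a thicker board or cable can take more force.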

An interpretive framework − a model − for strength, used in engineering. Force, F, and size or area, A, are used to compute stress, sigma. Sigma is then used to determine strength. Jorge Stolfi/Wikimedia Commons, CC BY-SA

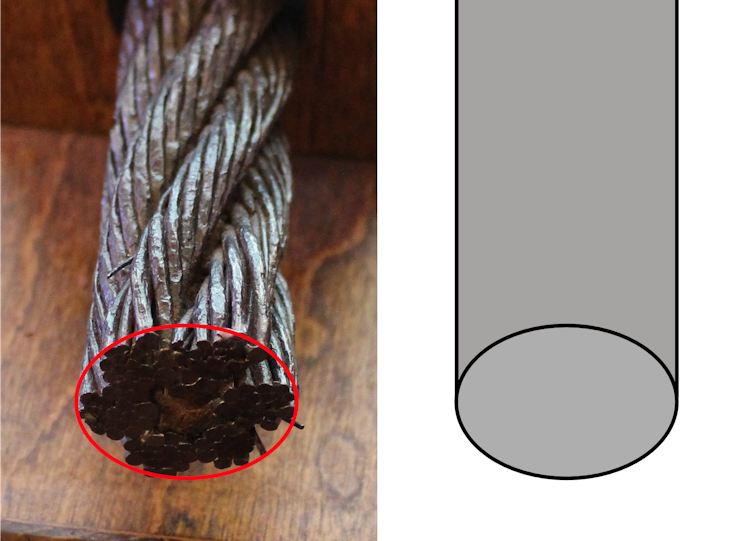

But all models leave out details from the real world. To compute stress, an engineer needs to describe the shape of an object. Real objects are complex, so the engineer simplifies their shape for the sake of computation.

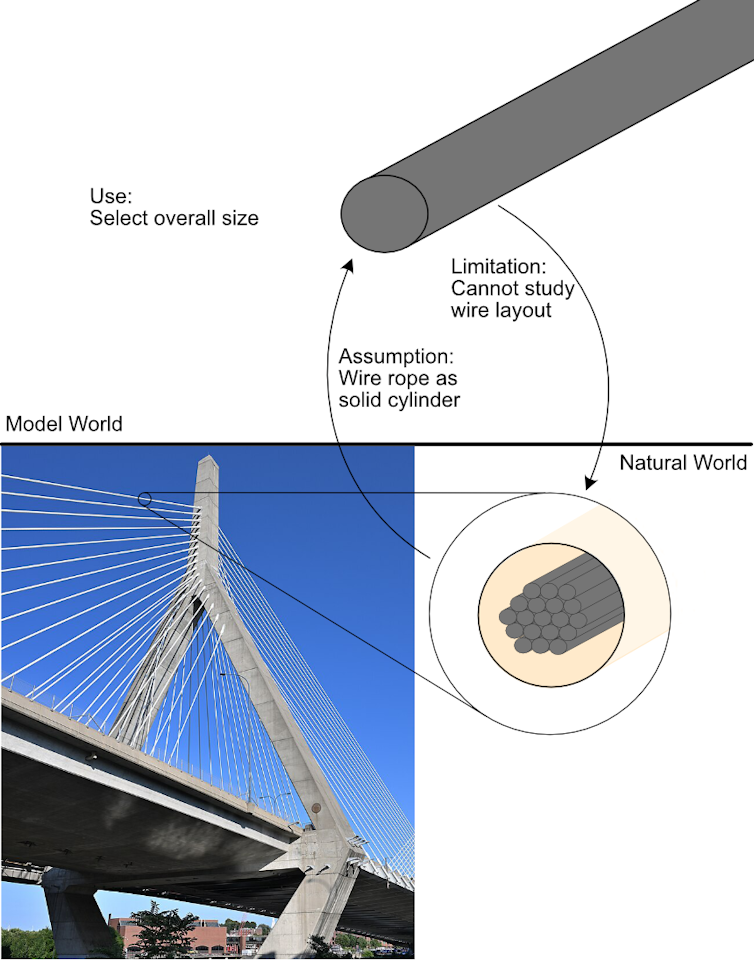

For instance, an engineer may take a complex bundle of wires and assume they act together as a single cylinder. This simplified shape may help them choose how many wires to bundle together and set the overall thickness of the bundle.

However, assumptions introduce limitations: The cylinder simplification assumes the individual wires don’t exist, so it doesn’t help determine how to weave the wires together. Engineers can − and do − make more detailed models where they need to, but even those have assumptions and limitations.
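The cylinder simplification can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not a rope-design procedure: the function names, the load, the wire diameter, the material strength and the safety factor are all hypothetical values chosen for the example. It treats the bundle as one solid cylinder whose area is the sum of the wire areas, so it can suggest a wire count and overall thickness but says nothing about how the wires should be woven.

```python
import math


def wires_needed(load_n, wire_diameter_m, strength_pa, safety_factor=3.0):
    """Smallest wire count whose combined cross-section carries the load.

    Assumption: the bundle acts as a single solid cylinder, so
    stress = force / total area applies to the whole bundle.
    """
    wire_area = math.pi * (wire_diameter_m / 2) ** 2  # area of one wire, m^2
    allowable_stress = strength_pa / safety_factor    # Pa, hypothetical margin
    required_area = load_n / allowable_stress         # m^2
    return math.ceil(required_area / wire_area)


def equivalent_diameter(n_wires, wire_diameter_m):
    """Diameter of the solid cylinder with the same total area as n wires."""
    return wire_diameter_m * math.sqrt(n_wires)


# Hypothetical inputs: 50 kN load, 2 mm wires, 1.5 GPa wire strength.
n = wires_needed(load_n=50_000, wire_diameter_m=0.002, strength_pa=1.5e9)
print(n, equivalent_diameter(n, 0.002))
```

Nothing in the calculation refers to individual wires beyond their count and area, which is exactly the limitation described above: gaps between wires, friction and the weave pattern are invisible to this model.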

Simplification of a wire rope as an assumed cylinder. This assumption may be appropriate for choosing the number of wires, but it is wholly inappropriate for determining the arrangement of wires. HaeB/Wikimedia Commons, modified by Zachary del Rosario, CC BY-SA

This interplay between assumptions and limitations is at the heart of all models. Engineers working on the Tacoma Narrows Bridge assumed no wind-driven vertical movement, which led to a limitation: They couldn’t predict the wind-driven flutter that shook the bridge apart.

The same idea holds true for more abstract models. Some companies that make facial recognition systems based on artificial intelligence assume their systems are accurate, given that they do a good job of picking out the correct face from a set of training data. However, outside researchers have shown that some training datasets introduce limitations.

The engineers who built these training datasets assumed their data had enough faces to represent most people, but these datasets underrepresented nonwhite people. This limitation led the systems to disproportionately target Black people.

In pursuit of better AI systems, some researchers assume that more training data is the most effective approach. This data-intensive approach has the limitation of an enormous environmental impact. Computing with large sets of data takes a lot of energy, since data centers are resource-intensive.

The trick to using models safely is to pick assumptions where the limitations do not ruin their intended use. The gold standard is to test. But testing isn’t always possible. For example, building a test bridge isn’t a luxury that structural engineers can afford.

Carefully selecting and creating proper models requires good judgment.

Teaching modeling

Engineering judgment involves a careful balance of trust and skepticism toward mathematics − the bedrock of many engineering models. Developing engineering judgment is difficult, and it usually emerges from years of experience. I teach a modeling and simulation course that jump-starts students’ engineering judgment.

My co-instructors and I invite students to build their own models, which is a pretty uncommon experience for engineering students. Students then identify the assumptions in their models, state their limitations and, importantly, justify how those limitations do not prevent them from safely using the model.

Example diagram of a model intended for choosing the size of a wire rope. The model is based on the assumption that the rope will be a solid cylinder. This imposes limitations on studying how the wires are woven together, but it doesn’t hinder the model’s intended use. 4300streetcar/Wikimedia Commons, modified by Zachary del Rosario, CC BY-SA

Engineering failures like the Tacoma Narrows Bridge can occur when engineers are not aware of a model’s assumptions and limitations. While courses often teach young engineers to make assumptions and use models, they rarely focus on these models’ limitations. Helping students develop their engineering judgment can prevent failures like “Galloping Gertie” from happening again.

Zachary del Rosario receives funding from the National Science Foundation and Toyota Research Institute.
